Designing the User Experience of the Harry Potter-Style "Sorting Hat" Was Hella Fun

Science Hack Day San Francisco • 48 hours (Oct 24-25, 2015)

Overview

The Sorting Hat was created within 48 hours by a 9-person ad-hoc team. It does everything the hat in the Harry Potter movies does, except read the wearer’s mind. By integrating IBM Watson's Natural Language Classifier (NLC), a Raspberry Pi 2B+ project board, Arduinos, YUN, servos, LEDs, microphones, a speaker, batteries and speech-to-text/text-to-speech services, the hat is able to digest the wearer's characteristics via voice input and sort the wearer into the house they most belong to.

With the help of storyboarding, character enhancements that combined audio with facial expressions, and visual feedback, the various stages of the hat-wearing experience were humanized within the given hardware constraints. Out of 32 final entries, the Sorting Hat won the "Defense Against The Dark Arts Award".

My Role

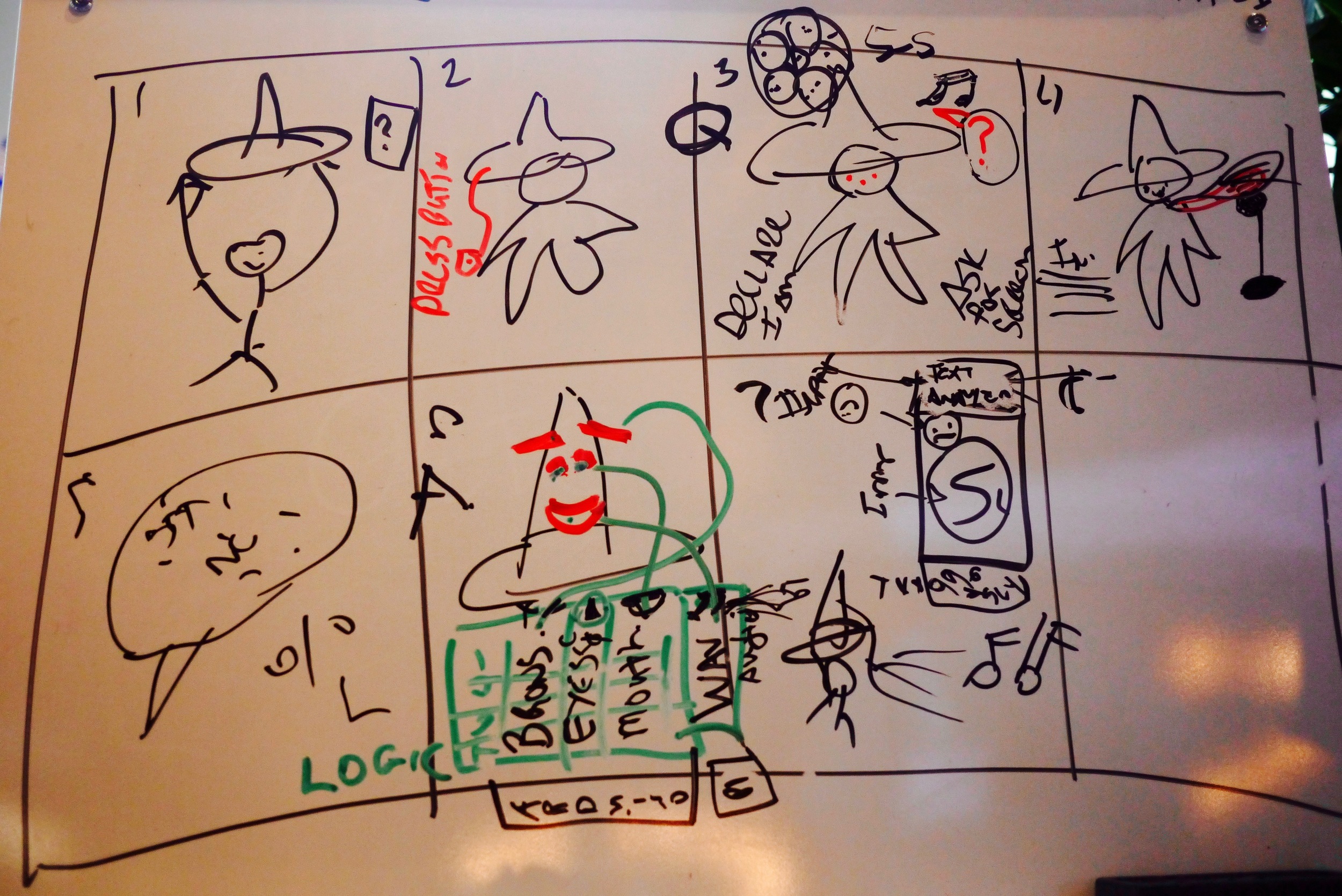

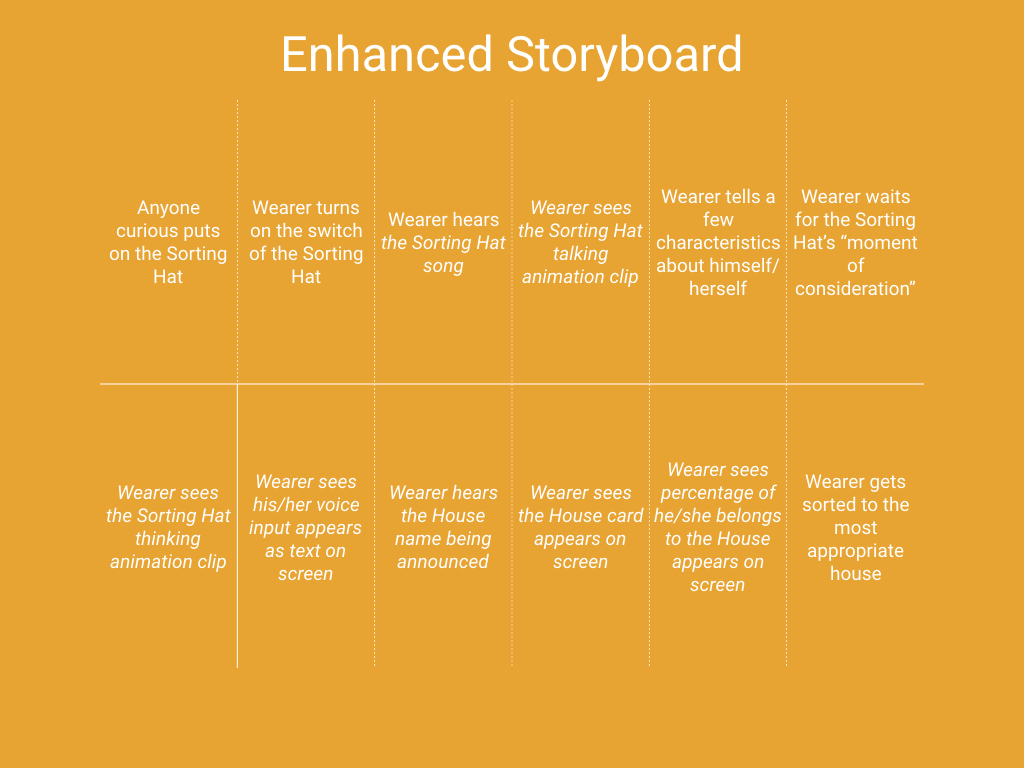

Storyboarding

Character Design

Presentation Slides

Poster

The Story

The Sorting Hat makes its appearance on the first day of Hogwarts' new school year. Each new student must wear it to determine which of the four school Houses they should go to.

Ryan Anderson, inspired by his 7-year-old Harry Potter fan Taylor, decided to build a real Sorting Hat within 48 hours at Science Hack Day San Francisco 2015. We, a 9-person ad-hoc team, were on a mission to make the hat “think” and “talk”.

Storyboarding

The team needed a holistic view of the end-to-end flow: when and how the hardware and services would need to communicate with each other to make it all happen.
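The end-to-end flow can be sketched roughly as follows. This is only an illustration of the stages named in the overview (voice input, speech-to-text, classification, spoken result), not the team's actual code. The function names `listen`, `transcribe`, `classify` and `announce` are hypothetical stand-ins for the microphone, the speech-to-text service, the Natural Language Classifier and the text-to-speech/speaker stage, and the assumption that the classifier returns one confidence score per house is mine.

```python
from typing import Callable, Dict

HOUSES = ("Gryffindor", "Hufflepuff", "Ravenclaw", "Slytherin")


def pick_house(confidences: Dict[str, float]) -> str:
    """Return the known house with the highest confidence score."""
    known = {h: c for h, c in confidences.items() if h in HOUSES}
    if not known:
        raise ValueError("classifier returned no known house")
    return max(known, key=known.get)


def sort_wearer(
    listen: Callable[[], bytes],                   # hypothetical: record the wearer
    transcribe: Callable[[bytes], str],            # hypothetical: speech-to-text
    classify: Callable[[str], Dict[str, float]],   # hypothetical: house -> confidence
    announce: Callable[[str], None],               # hypothetical: speak/light the result
) -> str:
    """Run one sorting session from voice input to announcement."""
    audio = listen()
    text = transcribe(audio)
    house = pick_house(classify(text))
    announce(house)
    return house
```

Passing the stages in as plain callables keeps the sketch independent of any particular service, which also mirrors how a storyboard lets each stage be discussed on its own.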

Humanize The Hat

Thinking from the wearer's perspective, I felt that a “magical touch” echoing the Harry Potter scene would help humanize the hat-wearing experience. So I buried myself in the Harry Potter wiki, the movie scene and the related soundtracks to gather materials that fit.

Constraints: One of the LEDs stopped working later on, so we used 3 colors to differentiate the 4 Houses.

My Hack

used a YouTube converter web app to rip the audio from the related movie scenes

opened audio in QuickTime

scouted the desired moments

trimmed the audio clips down to desired length

converted .m4a to .wav for audio file compatibility
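I did the trimming and conversion by hand in QuickTime. For anyone who would rather script it, the last two steps could be approximated as below. This is a sketch under assumptions: it swaps in the `ffmpeg` command-line tool (which I did not use, and which must be installed separately), and the file names and timings in the usage note are made up for illustration.

```python
import subprocess
from typing import List


def build_trim_command(src: str, dst: str, start_s: float, length_s: float) -> List[str]:
    """Build an ffmpeg command that cuts a clip and writes it to dst.

    ffmpeg infers the output format from the extension of dst,
    so a name ending in .wav produces a WAV file.
    """
    return [
        "ffmpeg",
        "-y",                  # overwrite dst if it already exists
        "-i", src,             # input file, e.g. an .m4a clip
        "-ss", str(start_s),   # start of the desired moment, in seconds
        "-t", str(length_s),   # length of the clip, in seconds
        dst,
    ]


def trim_to_wav(src: str, dst: str, start_s: float, length_s: float) -> None:
    """Run the command; raises CalledProcessError if ffmpeg fails."""
    subprocess.run(build_trim_command(src, dst, start_s, length_s), check=True)
```

For example, `trim_to_wav("scene.m4a", "hmm.wav", 12.5, 4)` would cut four seconds starting at 12.5 seconds into a hypothetical `scene.m4a`.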

Sample Voice Expression Before & After

All the audio files and the facial expressions were synchronized with this presentation to give the wearer visual feedback:

Take a look at how the hat works in our first powered test:

Post-Hacking Wishlist for A Better Hat-Wearing Experience

As I noticed in our user tests and the demo, the length of the “moment of consideration” varied depending on the network and the complexity of the algorithm. People easily got anxious while waiting.

Even if we included movie clips or the hat’s facial movements in those moments, the wearer couldn’t see them, and we didn’t always have access to a projector.

It’d be nice if we could incorporate holographic technology to let the wearer see the hat’s facial expression changing or the related movie clips playing.

That would not only keep them in context throughout, but also keep them entertained. The “a watched pot never boils” anxiety would easily be taken away.

This Was a Team Effort:

Ryan Anderson, Solution Architect

Taylor, 7-year-old scientist-in-training

Christopher Swanson, Software Engineer

David Uli, Software Engineer

Erik Danford, Learning Technologist, Aerial Videography Drone Operator

Jordan Hart, Learning Experience Designer

Jack Sivak, Robotics/Software Engineer

Ogi Todic, Software Engineer

Xiaomin Jiang, UX Designer